
Here comes a really long blog post, so I have included a very brief summary at the end of each section. You can read that and go for the long version after if you think you disagree with the statements!

- Defining Life: A Depressing Opinion

Deciding what is alive and what is not is an old human pastime. A topic of my lab’s research is Artificial Life, so we also tend to be interested in defining life, especially at the level of categories. What is the difference between this category of alive things and this category of not-alive things? There are lots of definitions trying to pin down the difference, none of them satisfactory enough to convince everyone so far.

One thing we talked about recently is the theory of top down causation. When I heard it, it sounded like this: big alive things can influence small not-alive things (like you moving the molecules of a table by pushing said table away) and this is top down causation, as opposed to bottom up causation where small things influence big things (like the molecules of the table preventing your fingers from going through it via small scale interactions).

I’m not going to lie, it sounded stupid. When you move a table, it’s the atoms of your hands against the atoms of the table. When you decide to push the table, it’s still atoms in your brain doing their thing. Everything is just small particles meeting other small particles.

Or is it? No suspense, I still do not buy top-down causation as a definition of life. But I do think it makes sense and can be useful for Artificial Intelligence, in a framework that I am going to explain here. It will take us from my personal theory of emergence, to social rules, to manifold learning and neural networks. On the way, I will bend concepts to what fits me most, but also give you links to the original definitions.

But first, what is life?

Well here is my depressing opinion: trying to define life using science is a waste of time, because life is a subjective concept rather than a scientific one. We call “alive” things that we do not understand, and when we gain enough insight into how they work, we stop believing they are alive. We used to think volcanoes were alive because we could not explain their behaviour. We personified as gods things we could not comprehend, like seasons and stars. Then science came along and most of these gods are dead. Nowadays science doesn’t believe that there is a clear-cut frontier between alive and not alive. Are viruses alive? Are computer viruses alive? I think that the day we produce a convincing artificial life, the last gods will die. Personally, I don’t bother too much about classifying things as alive or not; I’m more interested in questions like “Can it learn? How?”, “Does it do interesting stuff?” and “What treatment is an ethical one for this particular creature?”. I’m very interested in virtual organisms, not so much in viruses. Now to the interesting stuff.

Summary: Paradoxically, Artificial Life will destroy the need to define what is “alive” and what is not.

Top-down causation is about scale, as its name indicates. It talks about low level (hardware in your computer, neurons in your brain, atoms in a table) and high level (computer software, ideas in your brain, objects) concepts. Here I would like to make it about dimensions, which is quite different.

Let’s call “low level” spaces with a comparatively larger number of dimensions (e.g. a 3D space like your room) and “high level” spaces with fewer dimensions (like a 2D drawing of your room). Let’s take high level spaces as projections of the low level spaces. By drawing it on a piece of paper, you’re projecting your room onto a 2D space. You can draw it from different angles, which means that several projections are possible.

But mathematical spaces can be much more abstract than that. A flock of birds can be seen as a high dimensional space where the position of each bird is represented by 3 values on 3 bird-dependent axes: bird A at 3D position (a1, a2, a3), bird B at 3D position (b1, b2, b3), etc. That’s 3 axes per bird, already 30 dimensions even if you have only 10 birds! But the current state of the flock can be represented by a single point in that 30D space.

You can project that space onto a smaller one, for example a 2D space where the flock’s state is represented by one point whose position is just the mean of all the birds’ horizontal and vertical positions. You can see that the trajectory of the flock will look very different depending on whether you are in the low or the high dimensional space.
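To make the flock example concrete, here is a minimal sketch with NumPy. The bird positions are random numbers and the choice of x as "horizontal" and z as "vertical" is my own assumption for illustration; the point is only that the whole flock is one vector of 30 numbers, and that the projection is a 2 x 30 matrix.

```python
import numpy as np

rng = np.random.default_rng(0)

n_birds = 10
# Each row is one bird's 3D position (x, y, z). Values are made up.
positions = rng.uniform(0.0, 100.0, size=(n_birds, 3))

# Low level: the whole flock is ONE point in a 30-dimensional space,
# laid out as [x0, y0, z0, x1, y1, z1, ...].
flock_state = positions.reshape(-1)

# High level: a 2 x 30 projection matrix. Each output axis averages one
# coordinate over all the birds.
P = np.zeros((2, 3 * n_birds))
P[0, 0::3] = 1.0 / n_birds   # mean horizontal position (x)
P[1, 2::3] = 1.0 / n_birds   # mean vertical position (z)

flock_2d = P @ flock_state   # one point in the 2D space
print(flock_state.shape, flock_2d.shape)
```

Every row of `P` mixes ten different axes of the original space, so this is a combination of axes rather than a simple selection of two of them.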

What does this have to do with emergence?

Wikipedia will tell you a lot about the different definitions and opinions about emergence. One thing most people agree about is that an emergent phenomenon must be surprising, and arise from interactions between parts of a system. For example, ants finding the shortest path to food is an emergent phenomenon. Each ant follows local interaction rules (explore, deposit pheromones, follow pheromones). That they can find the shortest path to a source of food, and not a random long winding way to it, is surprising. You couldn’t tell that it was going to happen just by looking at the rules. And if I told you to build an algorithm inside the ants’ heads to make them find the shortest path, that’s probably not the set of rules you would have gone for.

I think that all emergent phenomena are things that happen because of interactions in a high dimensional space, but can be described and predicted in a projection of that space. When there is no emergence, no projection is ever going to give you a good description of your phenomenon or make it predictable. Food, pheromones, but also each ant is a part of the high dimensional original system. Each has states that can be represented on axes: ants move, pheromones decay. Ants interact with each other, which means that their states are not independent from each other. The space can be projected onto a smaller one, where a description with strong predictive power can be found: ants find the shortest path to food. All ants and even the food have been smashed into a single axis. Their path is optimal or not. They might start far from 0, the optimal path, but you can predict that they will end up at 0. If you have two food sources, you can have two axes; the ants’ closeness to the optimal solution depends on their position on these axes.

Another example is insect architecture: termite mounds, bee hives. The original system includes interactions between particles of building material and individual insects, but can be described in much simpler terms: bees build hexagonal cells.

Or take the flock of birds. Let’s say that the flock forms a big swarming ball that follows the flow of hot winds. The 2D projection is a more efficient way to predict the flock’s trajectory than the 30D space, or than following a single bird’s motion (a combination of its trajectory inside the swarm-ball and on the hot winds). Of course, depending on what you want to describe, the projection will have to be different.

Here we meet one important rule: if there is emergence, the projection must not be trivial. The axes must not be a simple subset of the original space, but a combination (linear or not) of the axes of the high dimensional space.
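For the linear case, this rule can be checked mechanically. The sketch below is my own illustration, and `is_trivial_projection` is a hypothetical helper: it assumes the projection is given as a matrix, and calls it trivial when every output axis copies at most one input axis.

```python
import numpy as np

def is_trivial_projection(P, tol=1e-12):
    """Return True if every output axis depends on at most one input axis."""
    P = np.asarray(P, dtype=float)
    nonzero_per_row = (np.abs(P) > tol).sum(axis=1)
    return bool(np.all(nonzero_per_row <= 1))

# Keeps axes 0 and 2 of a 4D space unchanged: a plain subset of the axes.
subset = np.array([[1, 0, 0, 0],
                   [0, 0, 1, 0]])

# Each output axis mixes two input axes.
mixing = np.array([[0.5, 0.5, 0.0, 0.0],
                   [0.0, 0.0, 0.5, 0.5]])

print(is_trivial_projection(subset))  # True
print(is_trivial_projection(mixing))  # False
```

Non-linear projections would need a different test; this only covers the matrix case.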

This is where the element of “surprise” comes in. This is a rather nice improvement on all the definitions of emergence I’ve found: all talk about “surprise” but most do not define objectively what is considered as surprising. The rule above is a more practical definition than “an emergent property is not a property of any part of the system, but still a feature of the system as a whole” (wikipedia).

A second, implicit rule follows: trajectories (emergent properties) built in low dimensional spaces cannot be ported to high dimensional spaces without modification.

You could try to build “stay in swarm but also follow hot winds” into the head of each bird. You could try to build “find shortest path” into the head of each ant. It makes sense: that is the simplest description of what you observe. The problems start when you try to build that with what you have in the real, high dimensional world. Each ant has few sensors. They cannot see far away. Implementing a high level algorithm rather than local interactions may sometimes work, but is not the easiest, most robust or most energy efficient solution. If you are building rules that depend explicitly on building high level concepts from low level high dimensional input, you are probably not on the right track. You don’t actually need to implement a concept of “shortest path” or “swarm” to achieve these tasks; research shows that you obtain much better results by giving these up. This is a well known problem in AI: complex high level algorithms do very poorly in the real world. They are slow, noise sensitive and costly.

However, I do not agree that emergent phenomena “must be impossible to infer from the individual parts and interaction”, as anti-reductionists say. Those that fit in the framework I have described so far can theoretically be inferred, if you know how the high dimensional space was projected onto the low dimensional one. Therefore you can generate emergent phenomena by drawing a trajectory in the low dimensional space, and trying to “un-project” it to the high dimensional space. By definition you will have several possible choices, but that should not be too big a problem. I intuitively think this generative approach works and I tried it on a few abstract examples; but I need a solid mathematical example to prove it. Nevertheless, if emergent phenomena don’t always have to be a surprise and can be engineered using tools other than insight, it’s excellent news!
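Here is a small sketch of what “un-projecting” could look like when the projection is linear. It is not the solid mathematical example mentioned above, only an illustration under my own assumptions: the projection is the flock-mean matrix `P` (10 birds, mean x and mean z), and I use the Moore-Penrose pseudo-inverse to pick one pre-image among the many possible ones.

```python
import numpy as np

n_birds = 10
# Same kind of projection as the flock example: mean x and mean z of 10 birds.
P = np.zeros((2, 3 * n_birds))
P[0, 0::3] = 1.0 / n_birds
P[1, 2::3] = 1.0 / n_birds

target = np.array([40.0, 25.0])   # a point chosen in the low dimensional space

# Choice 1: the smallest-norm flock state that projects onto the target.
P_pinv = np.linalg.pinv(P)
x_min = P_pinv @ target

# Choice 2: add anything that the projection cannot see (the null space of P).
rng = np.random.default_rng(1)
noise = rng.normal(size=3 * n_birds)
invisible = noise - P_pinv @ (P @ noise)
x_other = x_min + invisible

print(np.allclose(P @ x_min, target), np.allclose(P @ x_other, target))
```

Both `x_min` and `x_other` are valid 30D flock states for the same 2D point, which is the “several possible choices” mentioned above. Picking among them would need extra constraints, such as the local rules each bird is able to follow.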

Summary: an emergent phenomenon is a simplification (projection to low dimensional space) of underlying complex (high dimensional) interactions that lets you predict something about the system faster than by going through all the underlying interactions, whatever the input.

Random thought that doesn’t fit in this post: If there were emergent properties of the system “every possible algorithm”, the halting problem would be solvable. There are none, so we actually have to run all algorithms with all possible inputs (see also Rice’s theorem).

- Top-Down Causation and Embodied Cognition

So far we’ve discussed interactions happening between elements of the low level high dimensional system, or between the elements of the high level low dimensional system. It is obvious that what happens down there influences what happens up here. Can what happens upstairs influence what goes on downstairs?

Despite my skepticism at what you can read about top-down causation here and there, I think the answer is yes, in a very pragmatic way: if elements of the high dimensional space take inputs directly from the low dimensional space, top down causation happens. Until now the low-dim spaces were rather abstract, but they can exist in a very concrete way.

Take sensors, for example. Sensors reduce the complexity of the real world in two ways:

– by being imprecise (eyes don’t see individual atoms but their macro properties)

– by mixing different inputs into the same kind of output (although the mechanisms are different, you feel “cold” both when rubbing mint oil or an ice cube on your skin)

This reduction of dimensions is performed before giving your brain the resulting output. Your sensors give you input from a world that is already a non-trivial projection of a richer world. Although your actions impact the entire, rich, high dimensional world, you only perceive their consequences through the projection of that world. Your entire behaviour is based on sensory inputs, so yes, there is some top-down causation going on. You should not take it as a definition for living organisms though: machines have sensors too. “Sensor” is actually a pretty subjective word anyway.

You might not be convinced that this is top-down causation. Maybe it sounds too pragmatic and real to be top-down causation and not just “down-down” causation.

So what about this example: social rules. Take a group of humans. Someone decides that when meeting a person you know, bowing is the polite thing to do. Soon everyone is doing it: it has become a social rule. Even when the first person to launch this rule has disappeared from that society, the rule might continue to be applied. It exists in a world that is different from the high dimensional world formed by all the people in that society, a world that is created by them but has some independence from each of them. I can take a person out and replace them by somebody from a different culture; soon, they too will be bowing. But if I take everyone out and replace them by different people, there is no chance that suddenly everyone will start bowing. The rule exists in a low dimensional world that is inside the head of each member of the society. In that projection, each person that you have seen bowing in your life is mashed up into an abstract concept of “person” and used in a rule saying “persons bow to each other”. This low dimensional rule directs your behaviour in a high dimensional world where each person exists as a unique element. It’s bottom up causation (you see lots of people bowing and deduce a rule) that took its independence (it exists even if the original person who decided the rule dies, and whether or not some rude person decides not to bow) and now acts as top down causation (you obey the rule and bow to other people). When you bow, you do not do it because you remember observing Mike bow to John and Ann bow to Peter and Jake bow to Mary. You do it because you remember that “people bow to other people”. It is definitely a low dimensional, general rule dictating your interactions as a unique individual.

We have seen two types of top-down causation. There is a third one, quite close to number one. It’s called embodied cognition.

Embodied cognition is the idea that some of the processing necessary to achieve a task is delegated to the body of an agent instead of putting all the processing load on its brain. It is the idea that through interactions with the environment, the body influences cognition, most often by simplifying it.

My favourite example is the Swiss robot. I can’t find a link to the seminal experiment, but it’s a small wheeled robot that collects objects in clusters. This robot obeys fixed rules, but the result of its interactions with the environment depends on the placement of its “eyes” on the body of the robot. With eyes on the side of its body, the robot “cleans up” its environment. For other placements, this emergent behaviour does not appear and the robot randomly moves objects around.

Although the high level description of the system does not change (a robot in an arena with obstacles), changes in the interactions of the parts of the system (position of the eyes on the body) change the explanatory power of the projection. In one case, the robot cleans up. In the others, the behaviour defies prediction in a low dimensional space (no emergence). Here top-down causation works from the body of the robot to its interactions in a higher dimensional world. The low dimension description is “a robot and some obstacles”. The robot is made of physically constrained parts: its body. This body is what defines whether the robot can clean up or not — not the nature of each part, but how they are assembled. For the same local rules, interactions between the robot and the obstacles depend on the high level concept of “body”, not only on each separate part. The swiss robot is embodied cognition by engineered emergence.

In all the systems with top-down causation I have described so far, only one class falls into the anti-reductionist framework: those where the state of the high level space is historically dependent on the low level space. These are systems where states in the low dimensional world depend not only on the current state of the high dimensional one, but also on its past states. If on top of that the high dimensional world takes input from the low dimensional one (for example because directly taking high dimensional inputs is too costly), then the system’s behaviour cannot be described only by looking at the interactions in the high dimensional world.
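A toy sketch of this history dependence, under assumptions of my own: the high dimensional state is a vector of 4 numbers, the instantaneous projection is its mean, and the low dimensional state is a leaky running average with a made-up `decay` of 0.5. Two worlds with exactly the same present end up with different low dimensional states because their pasts differ.

```python
import numpy as np

def project(x):
    """Instantaneous projection: the mean of the high dimensional state."""
    return float(np.mean(x))

def run(history, decay=0.5):
    """Low dimensional state that mixes its own past with each new projection."""
    low = 0.0
    for x in history:
        low = decay * low + (1.0 - decay) * project(x)
    return low

present = np.array([1.0, 1.0, 1.0, 1.0])

# Same present state, two different pasts.
past_a = [np.zeros(4), np.zeros(4), present]
past_b = [np.full(4, 8.0), np.full(4, 8.0), present]

low_a = run(past_a)
low_b = run(past_b)
print(low_a, low_b)  # 0.5 3.5
```

Anything in the high dimensional world that reads `low_a` or `low_b` as input will behave differently in the two cases, even though the present high dimensional states are identical.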

Simple example: some social rules depend not only on what people are doing now, but on what they were doing in the past. You wouldn’t be able to explain why people still obey archaic social rules just by looking at their present state, and these rules did not survive by recording all instances of people obeying them (high dimensional input in the past), but by being compressed into lower dimensional spaces and passed on from person to person in this more digestible form.

This top-down causation with time delay cannot be understood without acknowledging the high level, low dimensional world. It is real, even if it only exists in people’s head. That low dimensional world is where the influence of the past high dimensional world persists even if it has stopped in the present high dimensional world. Maybe people’s behaviour cannot be reduced to only physical laws after all… But there is still no magic in that, and we are not getting “something from nothing” (a pitfall with top-down causation).

A counter argument to this could be that everything is still governed by physical laws, both people and people’s brains. Lateral causation at the lowest level between elementary particles can totally be enough to explain the persistence of archaic social rules, and therefore top-down causation does not need to exist.

I agree. But as soon as you are not looking at the lowest level possible, highest dimensional world (which currently cannot even be defined), top-down causation does happen. Since I am not trying to define life, this is fine with me!

Summary: Top-down causation exists when the “down” takes low dimensional input from the “top”. The key here is the difference in dimensions of the two spaces, not a perceived difference of “scale” as in the original concept of top-down causation. Maybe I should call it low-high causation?

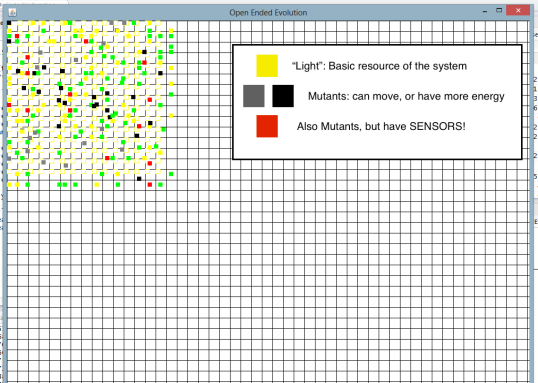

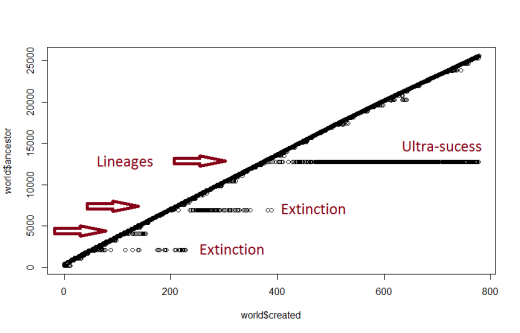

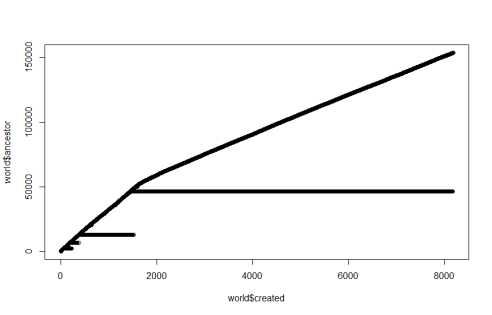

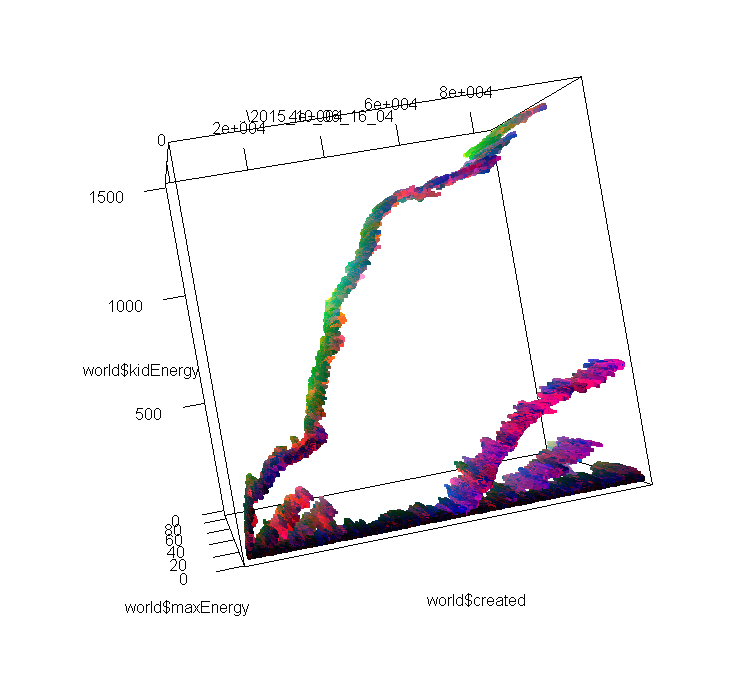

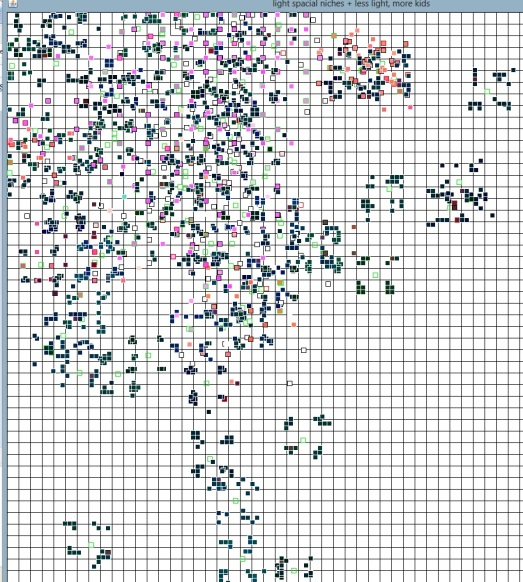

In this section I go back to my pet subject, Open Ended Evolution and the Interface Theory of Perception. You probably saw it coming when I talked of imprecise sensors. I define the relationship between OEE and top-down causation as: An open ended system is one where components take inputs from changing projected spaces. It’s top-down causation in a billion flavors.

These changes in projections are driven by interactions between the evolution of sensors and the evolution of high dimensional outputs from individuals.

Two types of projections can make these worlds interesting:

1. sensory projection (see previous section)

2. internal projections (in other words, brains).

The theoretical diversity of projections n.1 depends on the richness of the real world. How many types of energy exist, can be sensed, mixed and used as heuristics for causation?

N.2 depends on n.1 of course (with many types of sensors you can try many types of projections), but also on memory span and capacity (you can use past sensor values as components of your projections). Here, neurons are essentially the same as sensors: they build projections, as we will see in the next section. The main difference is that neurons can be plastic: the projections they build can change during your lifetime to improve your survival (typically, changes in sensors decrease your chances of survival…).

As usual, I think that the secret ingredient to successful OEE is not an infinitely rich physical world, even if it helps… Rather, the richness of choice of projected spaces (interfaces) is important.

I will not go into great detail in this section because it is kind of technical and it would take forever to explain everything. Let’s just set the atmosphere.

I was quite shocked the other day to discover that layered neural networks are actually the same thing as space projection. It’s so obvious that I’m not sure why I wasn’t aware of it. You can represent a neural network as a matrix of weights, and if the model is a linear one, calculate the output for any input by multiplying the input by the weight matrix (a matrix multiplication is a linear map, and when the output has fewer dimensions than the input it acts as a projection onto a smaller space).
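A minimal sketch of that equivalence for one linear layer, with random weights and sizes picked arbitrarily (30 inputs, 2 output neurons). Computing each neuron’s weighted sum by hand gives the same result as a single matrix product.

```python
import numpy as np

rng = np.random.default_rng(0)

n_inputs, n_outputs = 30, 2
W = rng.normal(size=(n_outputs, n_inputs))   # one row of weights per output neuron
x = rng.normal(size=n_inputs)                # one high dimensional input

# The layer as a projection: a single matrix product.
y_matrix = W @ x

# The same layer, neuron by neuron: each output is a weighted sum of the inputs.
y_neurons = np.array([
    sum(W[j, i] * x[i] for i in range(n_inputs))
    for j in range(n_outputs)
])

print(np.allclose(y_matrix, y_neurons))  # True
```

As soon as a non-linear activation is added, the layer is no longer a plain linear projection, but the weight matrix still decides which combinations of input axes each neuron looks at.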

The weight matrix is therefore quite important: it determines what kind of projection you will be doing. But research has shown that when you are trying to apply learning algorithms to high dimensional inputs, even a random weight matrix improves the learning results, as long as it reduces the dimension of the input (you then apply learning to the low dimensional input).
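One standard intuition for why a random matrix can be good enough is the Johnson-Lindenstrauss lemma: a random Gaussian projection roughly preserves distances between points. The sketch below only illustrates that property on made-up data; it is not the research mentioned above, and the sizes (1000 dimensions down to 300) are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)

n_points, high_dim, low_dim = 40, 1000, 300
X = rng.normal(size=(n_points, high_dim))    # made-up high dimensional data

# Random weight matrix, scaled so that distances keep the same magnitude.
R = rng.normal(size=(high_dim, low_dim)) / np.sqrt(low_dim)
Z = X @ R                                    # projected data

def pairwise_distances(A):
    """Euclidean distance between every pair of rows of A."""
    diff = A[:, None, :] - A[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

# Compare each pair's distance after and before projection.
upper = np.triu_indices(n_points, k=1)
ratios = pairwise_distances(Z)[upper] / pairwise_distances(X)[upper]
print(ratios.min(), ratios.max())
```

The ratios stay close to 1, so points that were near or far from each other remain so after projection, and a learning algorithm working on `Z` still sees most of the geometry of `X` at a fraction of the cost.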

Of course, you get even better results by optimizing the weight matrix. But then you have to learn the weight matrix first, and only then apply learning to the low dimensional inputs. That is why manifold learning has been invented, it seems. It finds you a good projection instead of using random stuff. Then you can try to use that projection to perform tasks like clustering and classification.
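As a simple stand-in for “finding a good projection”, here is PCA computed with an SVD. To be clear about the swap: PCA is a linear dimensionality reduction method, whereas manifold learning methods proper also handle curved structure. The data is synthetic, built so that 10D points lie near a 2D plane.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 200 points near a 2D plane embedded in a 10D space.
latent = rng.normal(size=(200, 2))
embedding = rng.normal(size=(2, 10))
X = latent @ embedding + 0.01 * rng.normal(size=(200, 10))

# PCA: centre the data, then keep the directions of largest variance.
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
W = Vt[:2].T            # learned 10 -> 2 projection matrix
Z = Xc @ W              # low dimensional representation

# Fraction of the total variance kept by the 2D projection.
explained = (S[:2] ** 2).sum() / (S ** 2).sum()
print(Z.shape, explained)
```

Clustering or classification would then run on `Z` instead of `X`.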

What would be interesting is to apply that to behavioural tasks (not just disembodied tasks) and find an equivalent for spiking networks. One possible way towards that is prediction tasks.

Say you start with a random weight matrix. Your goal is to learn to predict what projected input will come after the current one. For that, you can change your projection: two inputs that you thought were equivalent because they got projected onto the same point, but ended up having different trajectories, were probably not equivalent to begin with. So you change your projection so as to have these two inputs projected at different places.
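The detection step of this idea can be sketched as follows. Everything here is a toy of my own: `colliding_pairs` is a hypothetical helper, the dynamics are a made-up 2D rule, and the “change” of projection is just a swap between two hand-written matrices rather than a learning rule.

```python
import numpy as np

def colliding_pairs(P, inputs, next_inputs, tol=1e-9):
    """Pairs (i, j) projected onto the same point now but different points next."""
    now = inputs @ P.T
    nxt = next_inputs @ P.T
    pairs = []
    for i in range(len(inputs)):
        for j in range(i + 1, len(inputs)):
            same_now = np.linalg.norm(now[i] - now[j]) < tol
            differ_next = np.linalg.norm(nxt[i] - nxt[j]) > tol
            if same_now and differ_next:
                pairs.append((i, j))
    return pairs

# Toy dynamics: (x, y) becomes (x + y, y) at the next time step.
inputs = np.array([[1.0, 0.0], [1.0, 2.0], [3.0, 1.0]])
next_inputs = inputs @ np.array([[1.0, 0.0], [1.0, 1.0]])

P_bad = np.array([[1.0, 0.0]])    # only looks at x: inputs 0 and 1 look identical
P_good = np.array([[1.0, 1.0]])   # looks at x + y: inputs 0 and 1 are separated

print(colliding_pairs(P_bad, inputs, next_inputs))   # [(0, 1)]
print(colliding_pairs(P_good, inputs, next_inputs))  # []
```

A learning rule would use pairs like `(0, 1)` as an error signal to move the weights away from `P_bad`; how to do that well is exactly the open part.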

From this example we can see several things:

– Horror! Layers will become important. Some inputs might require one type of projection, and others a different type. This will be easier to implement if we have layers (see here).

– A map of the learned predictions (= input trajectories) will be necessary at each layer to manage weight updates. This map can take the form of a Self Organising Map or, more complex, a (possibly probabilistic) vector field where each vector points to the next predicted position. There are as many axes as neurons in the upper layer, and axes have as many values as neurons have possible values. (This vector field can actually be itself represented by a layer of neurons, with each lateral connection representing a probabilistic prediction.) Errors in the prediction drive changes in the input weights to the corresponding layer (would this be closer to Sanger’s rule than to BackProp?). Hypothesis: the time dependence of this makes it possible to implement using Hebbian learning.

– Memory neurons with defined memory span can improve prediction for lots of tasks, by adding a dimension to the input space. It can be implemented simply with LSTM in non-spiking models of NN, or with axonal delays for spiking models.

– Layers can be dynamically managed by adding connections when necessary, or more reasonably deleting redundant ones (neurons that have the exact same weights as a colleague can replace said weights by a connection to that colleague)

– Dynamic layer management will turn a feedforward network into a recurrent one, and the topology of the network will transcend layers (some neurons will develop connections bypassing the layer above to go directly to the next). The only remnant of the initial concept of layers will be the prediction vector map.

– Memory neurons in upper layers with connections towards lower layers will be an efficient tool to reduce the total number of connections and computational cost (See previous sections. Yes, top-down causation just appeared all of a sudden).

– Dynamic layer management will optimise resource consumption but make learning new things more difficult with time.

– To make the difference between a predicted input and that input actually happening, a priming mechanism will be necessary. I believe only spiking neurons can do that, by raising the baseline level of the membrane voltage of the neurons coding for the predicted input.

– Behaviour can be generated (1), as the vector field map tells us where data is missing or ambiguous and needs to be collected.

– Behaviour can be generated (2), because if we can predict some things we can have anticipatory behaviour (run to avoid incoming electric shock)
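The point about memory neurons in the list above can be illustrated with a plain delay line, which is neither an LSTM nor an axonal delay model, just the simplest way I can think of to add a “past value” dimension. The signal and the helper names are made up for the example.

```python
from collections import defaultdict

def successors(sequence, memory=0):
    """Map each (past..., current) state to the set of values that followed it."""
    table = defaultdict(set)
    for t in range(memory, len(sequence) - 1):
        state = tuple(sequence[t - memory : t + 1])
        table[state].add(sequence[t + 1])
    return dict(table)

def is_predictable(table):
    """True if every state was always followed by the same value."""
    return all(len(nexts) == 1 for nexts in table.values())

signal = [0, 1, 0, -1] * 5   # after a 0, the next value is sometimes 1, sometimes -1

no_memory = successors(signal, memory=0)   # state = current value only
one_step = successors(signal, memory=1)    # state = (previous value, current value)

print(is_predictable(no_memory))  # False
print(is_predictable(one_step))   # True
```

With one extra dimension holding the previous value, the ambiguous state `0` splits into `(1, 0)` and `(-1, 0)`, and each of them has a single possible future.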

Clustering and classification are useless in the real world if they are not used to guide behaviour. Actually, the concept of class is subjective to the behaviour it supports; here we take “prediction” as a behaviour, but the properties you are trying to describe or predict depend on which behaviour you are trying to optimise. The accuracy or form of the prediction depends on your own experience history, and the input you get to build predictions from depends on your sensors… Here comes embodied cognition again.

Summary: predictability and understanding are the same thing. Predictability gives meaning to things and thus allows classes to form. The difference between deep learning and fully recurrent networks is top-down causation.

It might be tempting to generalise top-down causation. Maybe projecting to lower dimensions is not that important? Maybe projecting to different spaces with the same number of dimensions, or projecting to higher dimensional spaces, enhances predictability. After all, top-down projection in layered networks is equivalent to projecting low dimensional input to a higher dimensional space (see also auto-encoders and predictive recurrent neural networks). But if our goal is to make predictions in the least costly way possible (possibly at the cost of accuracy), then of course projection to lower dimensional spaces is a necessity. When predictions must be converted back to behaviour, projection to high dimensional spaces becomes necessary; but in terms of memory storage and learning optimisation, the lowest dimensional layer is the one that allows reduction of storage space and computational cost (an interesting question here is, what happens to memory storage when the concept of layer is destroyed?).

One possible exception would be time (memory). If knowing the previous values of an input improves predictability and you add neuron(s) to keep past input(s) as memory, you are effectively increasing the number of dimensions of your original space. But you use this memory for prediction (i.e. low dimensional projection) in the end. So yes, the key concept is dimension reduction.

A nice feature of this theory is that models would be able to capture causal relationships (something that deep learning cannot do, I heard). This whole post was about a concept called “top-down causation” after all. If an input improves predictability of trajectories in the upper layer, certainly this suggests a causal relationship. So what the model is really doing all the way is learning causal relationships.

Summary: dimension reduction is the key to learning causal relationships.

Wow, that was a long post! If you have come this far, thank you for your attention and don’t hesitate to leave a thought in the comments.